18 January 2017

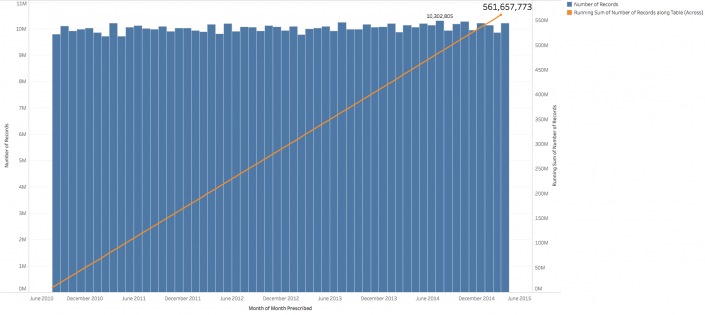

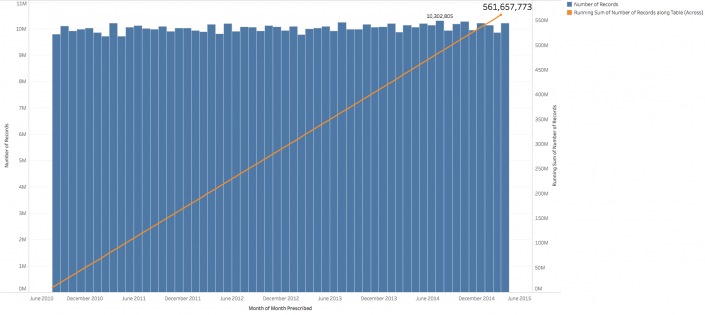

For a while now, about 2 years, I've been playing with Exasol. My introduction to it was through a client who was very excited about pulling all their data puddles, whether that data is in Excel, MS SQL, or from third parties, into one single data lake. They chose Exasol because it reverses the ETL (Extract, Transform & Load) method to ELT (Extract, Load & Transform) which is possible due to powerful data ingestion, transformation & querying capabilities.I was fortunate enough to talk Exasol into giving me a demo environment and I immediately fell in love. At the time I had access to live airplane locations from around the world and was pulling the data down with Alteryx, loading it into Amazon's Redshift database. An internal training event however showed me a big limitation of Redshift...concurrency. I tried to get 12 people to all use the data at once and after 10 mins everyone was locked out.Not to worry though I had a new tool to play with. After a CSV export from Redshift into S3, an IMPORT statement in Exasol and gigabytes of data were available in just 12 mins. Yes...222,646,564 records imported in just 12 minutes. It was so fast you felt that (A) you must have done something wrong or (B) it had just failed, but without an error. In fact now I think back it could have completed sooner had the internet been faster. We should make the internet faster!Anyway, I digress. If my first big import task was fast, my second import task blew me away. This time it was UK NHS GP Prescribing data avialable in monthly CSV files. Now after importing one CSV file from S3, I wondered if I could import every single monthly CSV direct from a public government website. 26 minutes after setting off ~60 SQL IMPORT statements and I had 5 years of prescriptions data and 561,657,773 records. So this is all great, but remember how I got hold of this Exasol instance, it was provided by Exasol. A fast database is no good if it takes a team of computer science graduates days to implement it. So how about we put this to a real life test? Here's the scenario:

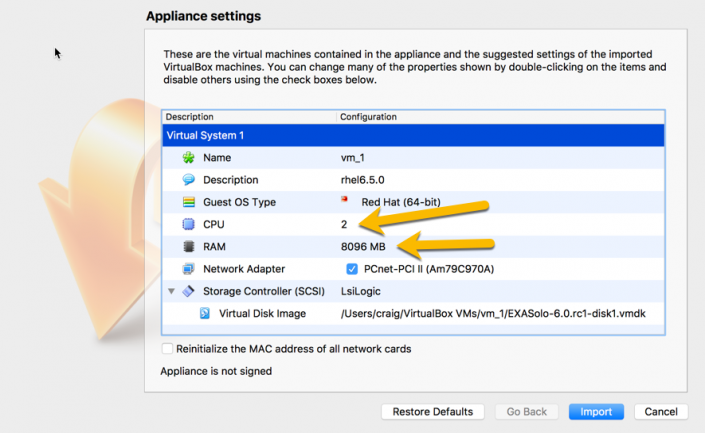

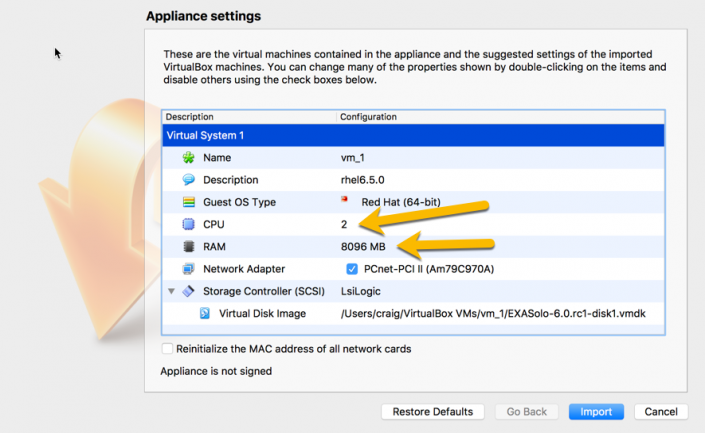

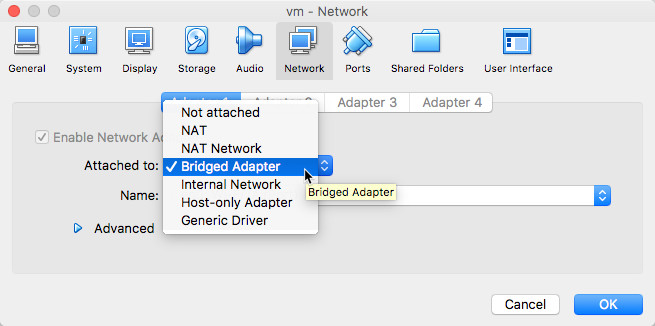

So this is all great, but remember how I got hold of this Exasol instance, it was provided by Exasol. A fast database is no good if it takes a team of computer science graduates days to implement it. So how about we put this to a real life test? Here's the scenario: I changed the CPU value to 2 & RAM to 8096 MB. I've got 4 cores and 16GB RAM on my machine, I can spare half for Exasol.20 mins (12:30) - Tried to Start Virtual Machine. Network adapter failed.Once the import completed there seemed like no better time than to hit the big 'Start' button in VirtualBox. Trouble was that the imported image is configured for a network adapter that didn't exist on my machine. So I went into Settings -> Network and at first I changed Adapter 1 to be 'NAT'.

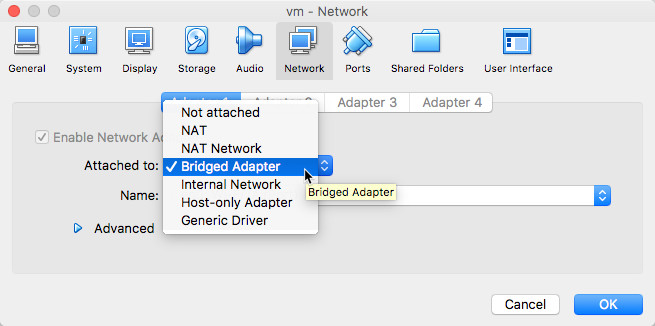

I changed the CPU value to 2 & RAM to 8096 MB. I've got 4 cores and 16GB RAM on my machine, I can spare half for Exasol.20 mins (12:30) - Tried to Start Virtual Machine. Network adapter failed.Once the import completed there seemed like no better time than to hit the big 'Start' button in VirtualBox. Trouble was that the imported image is configured for a network adapter that didn't exist on my machine. So I went into Settings -> Network and at first I changed Adapter 1 to be 'NAT'. I would later learn that 'Bridged Adapter' was what I wanted. My goal was to have the Exasol DB get an IP address from my network DHCP just like any other computer on the network would. What 'NAT' does is start up a local DHCP and assign Exasol a local IP address that can't be seen from my network.I started Exasol no problem but when I couldn't connect to it decided to go get some lunch. Over a hot meal I decided Bridged was probably more appropriate to try.

I would later learn that 'Bridged Adapter' was what I wanted. My goal was to have the Exasol DB get an IP address from my network DHCP just like any other computer on the network would. What 'NAT' does is start up a local DHCP and assign Exasol a local IP address that can't be seen from my network.I started Exasol no problem but when I couldn't connect to it decided to go get some lunch. Over a hot meal I decided Bridged was probably more appropriate to try.

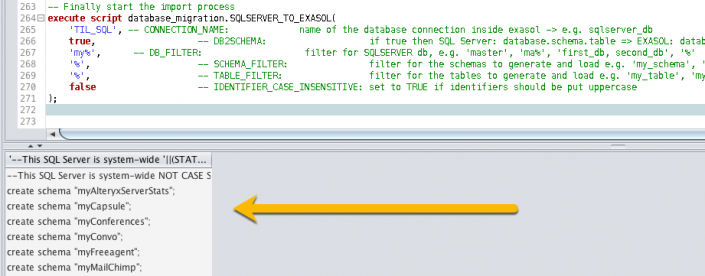

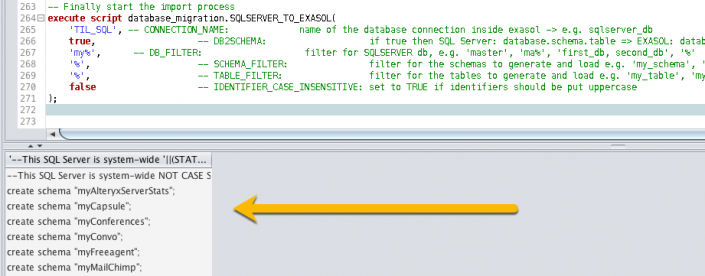

Paste the Github code into the SQL Editor and scroll straight down to the bottom.

Paste the Github code into the SQL Editor and scroll straight down to the bottom. in Exaplus. After a few seconds (depending on the number of databases, tables & network connection speed) you'll see a whole bunch of records returned in the output window:

in Exaplus. After a few seconds (depending on the number of databases, tables & network connection speed) you'll see a whole bunch of records returned in the output window: We're not done yet! We need to run all of this output to actually do the import. The script just gave us the SQL code we need.

We're not done yet! We need to run all of this output to actually do the import. The script just gave us the SQL code we need.

So this is all great, but remember how I got hold of this Exasol instance, it was provided by Exasol. A fast database is no good if it takes a team of computer science graduates days to implement it. So how about we put this to a real life test? Here's the scenario:

So this is all great, but remember how I got hold of this Exasol instance, it was provided by Exasol. A fast database is no good if it takes a team of computer science graduates days to implement it. So how about we put this to a real life test? Here's the scenario:The Scenario

My company's been using Microsoft SQL Server since 1992 and hasn't upgraded it in a while. It's full of useful data, but it's slow to analyse. I want to point my modern BI tool Tableau at it, but there's lots of tables that need joining. I could do it all drag and drop in Tableau and use data extracts but the loading time for those extracts is going to be crazy. After all, who's ever heard of actually implementing a foreign key (joke DBAs, please don't send me nasty emails).I did however recently attend Tableau's Conference in Austin and ran into some very friendly Germans from Exasol. Can we make a copy of my entire SQL Server in Exasol? And can I do it over my lunch break (1 hour)?A Real Life Example

I did actually run this experiment. And here's how it went down.0 mins (12:10) - Started DownloadsExasol have a free community edition of their product available for download. It's limited to a 1 node instance and 200GB RAM. Well I have only one laptop and 16GB RAM so I think I'm fine.The download is available here http://www.exasol.com/en/download/It comes down as an virtual machine which means it runs completely self contained on your laptop, no need to learn linux to get it working. The download works with VirtualBox, VMWare Player or KVM. I opted for VirtualBox so it was off to https://www.virtualbox.org to download that too.3 mins (12:13) - Started installing VirtualBoxVirtualBox is an easy install and was done in 60 seconds.4 mins (12:24) - Imported Exasol Virtual MachineThe download completed, double clicking on it starts the import. I changed the CPU value to 2 & RAM to 8096 MB. I've got 4 cores and 16GB RAM on my machine, I can spare half for Exasol.20 mins (12:30) - Tried to Start Virtual Machine. Network adapter failed.Once the import completed there seemed like no better time than to hit the big 'Start' button in VirtualBox. Trouble was that the imported image is configured for a network adapter that didn't exist on my machine. So I went into Settings -> Network and at first I changed Adapter 1 to be 'NAT'.

I changed the CPU value to 2 & RAM to 8096 MB. I've got 4 cores and 16GB RAM on my machine, I can spare half for Exasol.20 mins (12:30) - Tried to Start Virtual Machine. Network adapter failed.Once the import completed there seemed like no better time than to hit the big 'Start' button in VirtualBox. Trouble was that the imported image is configured for a network adapter that didn't exist on my machine. So I went into Settings -> Network and at first I changed Adapter 1 to be 'NAT'. I would later learn that 'Bridged Adapter' was what I wanted. My goal was to have the Exasol DB get an IP address from my network DHCP just like any other computer on the network would. What 'NAT' does is start up a local DHCP and assign Exasol a local IP address that can't be seen from my network.I started Exasol no problem but when I couldn't connect to it decided to go get some lunch. Over a hot meal I decided Bridged was probably more appropriate to try.

I would later learn that 'Bridged Adapter' was what I wanted. My goal was to have the Exasol DB get an IP address from my network DHCP just like any other computer on the network would. What 'NAT' does is start up a local DHCP and assign Exasol a local IP address that can't be seen from my network.I started Exasol no problem but when I couldn't connect to it decided to go get some lunch. Over a hot meal I decided Bridged was probably more appropriate to try.22 mins (12:32) Lunch & Email...13:48 - Exasol is running

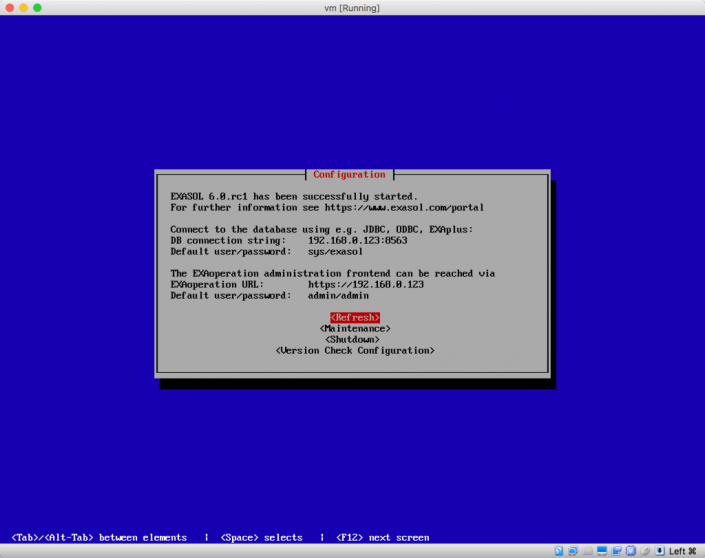

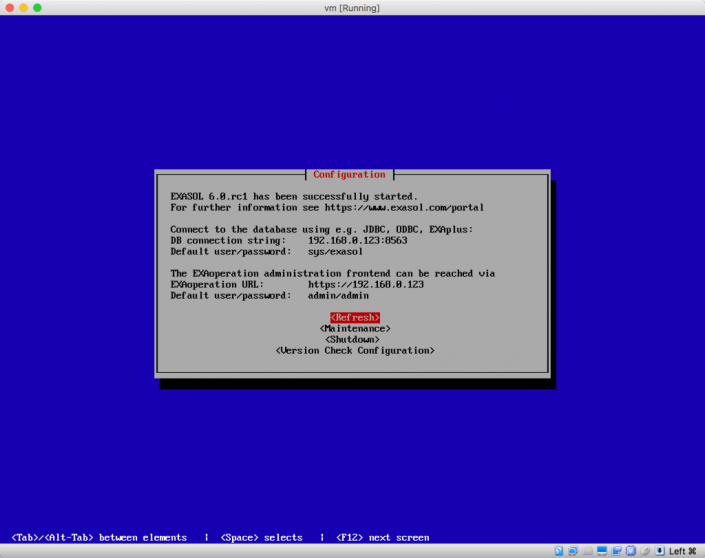

After some lunch and catching up on emails I had a working instance of Exasol with an IP address that matched my LAN pool. In case you're wondering this is what a running Exasol server looks like in VirtualBox:

32 mins (13:58) - Ran the SQL migration script

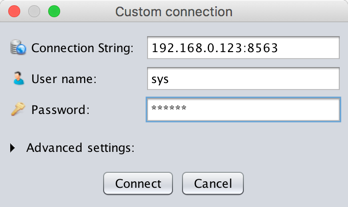

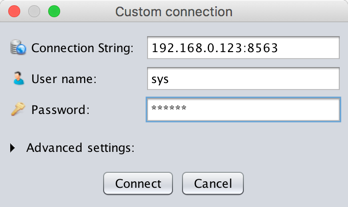

So with any new test database the bit that's most important is 'How do I get my data in? Can't you just copy my existing DB?'There's a not so super secret but definitely super useful script just waiting for you on Exasol's Github page. Choose your existing database flavour, copy and paste, and away you go...almost.In my case I used the SQL Server script https://github.com/EXASOL/database-migration/blob/master/sqlserver_to_exasol.sqlYou can use any database client you'd like which can connect using JDBC. Personally though I've kept close to home and used Exaplus (download from https://www.exasol.com/portal/display/DOWNLOAD/6.0). The details that you see in the VirtualBox console go into the connection screen: Paste the Github code into the SQL Editor and scroll straight down to the bottom.

Paste the Github code into the SQL Editor and scroll straight down to the bottom.-- Create a connection to the SQLServer databasecreate or replace CONNECTION sqlserver_connectionTO 'jdbc:jtds:sqlserver://192.168.1.42:1433'USER 'user'IDENTIFIED BY 'exasolRocks!';-- Finally start the import processexecute script database_migration.SQLSERVER_TO_EXASOL('sqlserver_connection', -- CONNECTION_NAME: name of the database connection inside exasol -> e.g. sqlserver_dbtrue, -- DB2SCHEMA: if true then SQL Server: database.schema.table => EXASOL: database.schema_table; if false then SQLSERVER: schema.table => EXASOL: schema.table'AdventureWorks%', -- DB_FILTER: filter for SQLSERVER db, e.g. 'master', 'ma%', 'first_db, second_db', '%''%', -- SCHEMA_FILTER: filter for the schemas to generate and load e.g. 'my_schema', 'my%', 'schema1, schema2', '%''%', -- TABLE_FILTER: filter for the tables to generate and load e.g. 'my_table', 'my%', 'table1, table2', '%'false -- IDENTIFIER_CASE_INSENSITIVE: set to TRUE if identifiers should be put uppercase);The find-replaces are pretty simple, but just to be thorough...- Change '192.168.1.42' to the network address of your SQL Server

- Change 'user' to the username you use to access your SQL Server

- Change 'exasolRocks!' (it does btw) to the password for your SQL Server

- You only need to change 'sqlserver_connection' (there's 2 occurrences) if you're planning on importing from multiple servers

- Are you importing multiple databases? Then leave DB2SCHEMA as true. If you're just pulling in one database switch it to false

- Which databases do you want to import? Change 'AdventureWorks%' to filter to the databases you want. The % is a wildcard so 'db%' will import all databases starting with the characters 'db'. If you just used '%' it'll import all your databases...why not.

- Same goes for the SCHEMA_FILTER and TABLE_FILTER

We're not done yet! We need to run all of this output to actually do the import. The script just gave us the SQL code we need.

We're not done yet! We need to run all of this output to actually do the import. The script just gave us the SQL code we need.34 mins (14:00) - Start data migration from SQL Server

Don't copy & paste each row one at a time. Select the first row, hold down shift, select the last row, right click & select copy. Start a new SQL Editor and paste in what we just copied. Finally hit that 'Execute all statements' button again, sit back and relax as your data flows into Exasol.Now obviously the time this process takes depends on the amount of data in your database and the number of tables you're importing. Just for reference I did my import over the web and here's the timing I experienced:14:03 - First table importing (450,531 records)14:17 - All other tables start importing14:21 - FINISHED

That's for 12 different databases each with approx 8 tables in. As you can see it took about 14 minutes to import 450k records. Most of the holdup there was probably my SQL Server running the Select * query.